Skynet wants your Passwords! – AI and Social Engineering

As we explore the capabilities of modern AI in assisting us across both our personal and professional lives, malicious actors too are investigating its potential uses. In this series of blog posts, we delve into the realm of social engineering attacks that we need to be prepared for in this era of advanced AI. We aim to identify these threats and understand how to effectively defend against them. The initial post focuses on examining current attack scenarios and potential future developments.

Computers have significantly changed the scale at which we can process data, conduct simulations, or communicate with one another. Similarly, AI may change the scale at which tasks that previously required humans can be carried out. For instance, crafting personalized emails for a thousand individuals formerly required humans to compose a thousand separate emails. Now it is feasible to instruct an LLM (large language model) like ChatGPT to create these emails.

Just like any powerful tool, AI can be harnessed for both constructive and harmful purposes. Just as individuals might use AI to delegate email composition, attackers could exploit it to generate phishing emails. In the pre-AI era, executing social engineering attacks with a high success rate required tailoring the approach to each individual victim. Given the substantial effort involved, sophisticated social engineering attacks were predominantly aimed at high-value targets, for example manipulating a CFO into initiating fund transfers. Today, AI tools enable mass customization of attacks, substantially reducing the investment required per victim. Consequently, we can anticipate a surge in highly targeted social engineering attacks directed at a much broader audience.

State-of-the-art AI technologies yield content that closely resembles human-generated output (e.g., text, drawings) or closely mimics reality (e.g., images). Unsuspecting individuals are prone to perceiving such content as genuine, which leaves them susceptible to automated social engineering attacks.

Avenues of Abuse

AI empowers malicious actors with an array of social engineering techniques, including:

- Generating realistic images of individuals in order to impersonate them

- Synthesizing someone’s voice for phone calls and other audio content

- Creating convincing text for emails and chat messages, capable of fooling even careful readers

The attacks of this kind seen so far only scratch the surface. As AI technology continues to progress, we can expect to see increasingly sophisticated and varied attack vectors, including video impersonation and other forms of “deepfake” media.

Autonomous AI and the Future

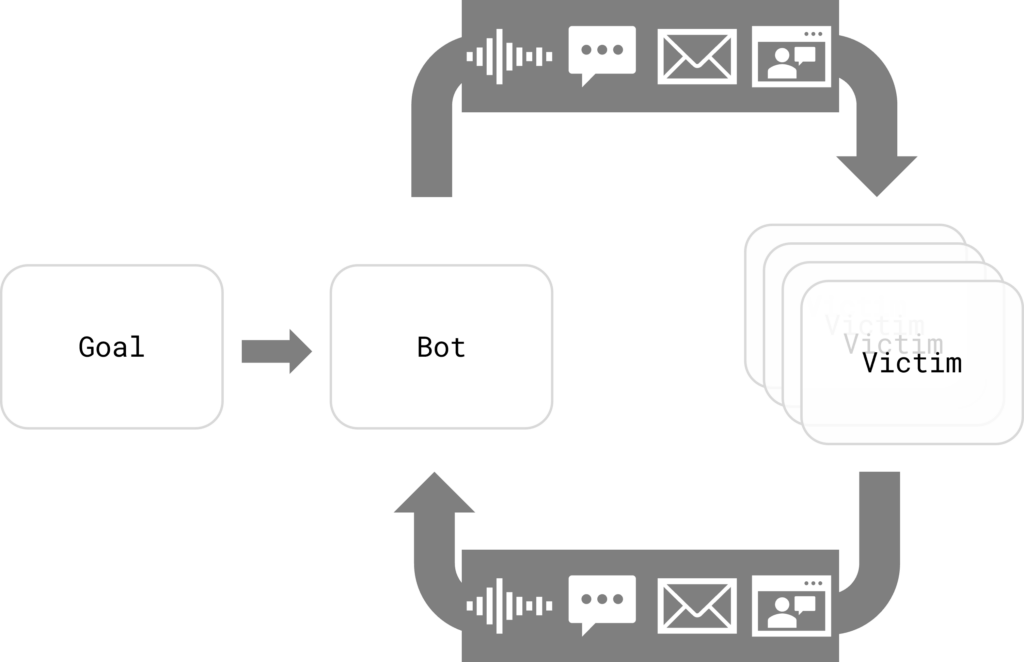

Autonomous agents, exemplified by systems like AutoGPT, bring a new level of flexibility to the realm of AI. Rather than being assigned a single task, an autonomous agent can undertake many individual actions to achieve a broader objective. For instance, an attacker might set up an autonomous AI and direct it to carry out financial theft. The AI could then systematically execute all the required steps: identifying targets, gathering relevant information, tailoring personalized attack strategies, creating websites, email addresses, and phone lines, carrying out the attack itself, and adjusting its tactics as needed.

By eliminating the human factor, these fraudulent schemes could operate at an unparalleled scale and pace. As autonomous agents advance in sophistication, they could concurrently target multiple victims and dynamically adapt their approaches based on the responses received.

Conclusion

Regardless of whether AI is overhyped, there’s no denying the potential impact of AI technologies on our lives. In many regards, the cat’s out of the bag – since the technology is widely available it will be used. This is especially true for malicious actors who will not follow any legal limitations that may be set going forward. We will therefore need to be prepared to defend against these kinds of attacks, which we will explore in a future post.

In our upcoming blog post, we will delve into the current defenses against AI-powered attacks. Stay tuned for an in-depth exploration of the cybersecurity implications of AI and how we can best prepare for the challenges ahead.

A list of all available posts of this series is also accessible under the following link.

1 Throughout this series, we will use the terms AI and ML (machine learning) interchangeably. While the series is technically centered on ML, it is worth noting that in the public sphere the term AI has effectively become synonymous with ML.